Good Questions Bad Questions

How to collaborate effectively with data teams

In his book Growth Levers and How to Find Them, Matt Lerner recounts a story from his PayPal days, when they had just launched a new payment system but the activation rate was only 10%:

It was 2005. PayPal had decided to expand and bet the whole company on a new product, which allowed merchants to process transactions on their own sites via card processing APIs....Thousands signed up, but few used it: the activation rate was just 10%.

He was tasked with increasing activation past 40% but had no idea how to get that number. He decided to talk to a customer who had NOT activated yet and see if he could figure out why.

So I asked, “Why haven’t you processed any transactions?”

“Well, I don’t have any customers yet,” she said. Her website had been live for six months with zero sales. Her business was going nowhere. I realized the problem: many of our customers had no customers of their own. And I couldn’t “growth hack” my way around that.

That night, I asked my favorite analyst, Igor: “How much of our revenue comes from our top 10% of signups?”

Before continuing, let’s pause here and look at that question. It’s a VERY good question. But he could also have asked, “Can you build me a dashboard with our top selling customers?” That is a BAD question. Why? We’ll get to that in a bit.

Let’s get back to the story.

An hour later, he sent me a spreadsheet with the answer: almost all of it. It didn’t matter whether we activated 30% or 40% of our new customers. What mattered was activating the right 10%.

We changed our entire approach. We developed a predictive model to score new signups on their propensity to become valuable customers. We’d try to increase revenue by giving high-scoring signups a white-glove onboarding experience, walking them through setup and teaching them to use the system.

It turns out the distribution of revenue across activated customers was nowhere near normal; in fact, it was quite lopsided. 90% of revenue came from only 10% of all clients. That’s what an actionable insight looks like. It tells you something important and suggests a clear action.
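To make the question concrete, here is a minimal Python sketch of how an analyst might answer “how much of our revenue comes from our top 10% of signups?” The function name `top_share` and the revenue figures are made up for illustration; they are not PayPal’s data, and this is not necessarily how Igor did it.

```python
def top_share(revenues, fraction=0.10):
    """Return the share of total revenue contributed by the top `fraction` of accounts.

    `revenues` is a list with one revenue figure per signup (hypothetical input).
    """
    if not revenues:
        raise ValueError("revenues must not be empty")
    if not 0 < fraction <= 1:
        raise ValueError("fraction must be in (0, 1]")

    # Rank accounts from highest to lowest revenue.
    ranked = sorted(revenues, reverse=True)

    # Number of accounts in the top slice; always keep at least one.
    k = max(1, round(len(ranked) * fraction))

    total = sum(ranked)
    if total == 0:
        return 0.0
    return sum(ranked[:k]) / total


# Ten invented accounts, chosen so that one account dominates.
revenues = [900, 20, 15, 15, 10, 10, 10, 10, 5, 5]
share = top_share(revenues, fraction=0.10)
print(f"Top 10% of signups account for {share:.0%} of revenue")
```

The whole analysis is a sort and two sums, which is why the answer could come back in an hour rather than two weeks.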

Later on they ran an experiment to measure the impact of the white-glove experience, which turned out to be huge. More than 40% of the accounts that got the white-glove experience, rather than the automated emails, activated. That prompted them to roll it out to everyone, to the tune of $100M in additional ARR.

Such is the power of a good question!

Good Questions Bad Questions

It’s a well-known fact that many data teams are overloaded with requests. Everyone is trying to validate some hypothesis, get a report or dashboard made or quench some curiosity. Since we don’t know the cost of each question — and the data team isn’t voicing their concerns — we feel like we can ask anything.

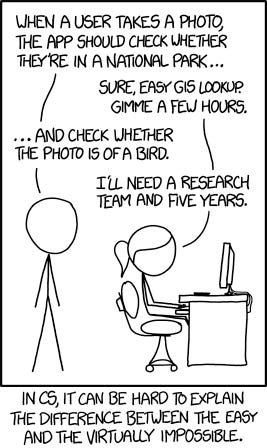

What might sound like an interesting question to you as a leader is, to the data team, either an hour’s worth of effort to run some queries or a two-week effort to build a brand new pipeline that brings data into the data warehouse, cleans it and models it before anyone can run a query.

Not all questions are the same. Some questions lead to productive insights while others are mere curiosities. Without a good filtering mechanism in place, you will waste valuable data team time producing insights that are discarded as soon as they’re created, and you will end up with thousands of dashboards and reports nobody uses strewn across your data platform.

Many startups have sprung up to try and fix this problem with technology. Their approach focuses on reducing the cost of answering questions via automated systems. These solutions range from BI tools like Tableau and Looker to semantic layer tools, text-to-SQL LLM-based systems and the “AI analyst.”

There’s nothing wrong with technological advancements, but I’m doubtful these solutions will work long term. As I’ve stated before, the issue is “induced demand” where the more easy questions you can answer, the more exotic questions you’ll get.

So what’s the solution then? How can you tell the difference between good questions and bad questions? How do you make sure you’re asking good questions, like the one we saw above, that lead to fruitful collaboration with your data team?

Asking good questions

A good question is driven by an underlying hypothesis about how the business grows, and it empowers the data analyst to apply their expertise. Analysts, on the other hand, should demand good questions.

“How much of our revenue comes from our top 10% of signups?” is a good question because:

The underlying causal model of business growth is well understood. If signups increase by 40%, revenue will increase by X%, and in order to increase signups we have to understand the underlying distribution of activated accounts. If the distribution is uneven and a power law applies, the entire approach to growth changes.

Matt could clearly articulate his hypothesis when asked “why.” He would be able to say, “I have a hunch that the distribution of revenue is extremely lopsided, 80/20 style. If that’s true, we’re wasting our time trying to activate everyone. We can focus our efforts on the people most likely to activate and figure out how to get more of them. If not, we keep looking.”

It empowers the analyst to keep spelunking if the initial approach doesn’t uncover any useful insights. Suppose the top 10% doesn’t account for much of the revenue; looking at the top 20% instead might reveal the key.
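That kind of spelunking can be as simple as sweeping the cutoff instead of fixing it at 10%. Below is a rough Python sketch under invented assumptions: the function name `revenue_concentration`, the list of cutoffs and the twenty revenue figures are all hypothetical, picked so that the top 10% looks unremarkable while the top 20% tells the real story.

```python
def revenue_concentration(revenues, fractions=(0.05, 0.10, 0.20, 0.50)):
    """Map each cutoff in `fractions` to the revenue share of the top accounts.

    `revenues` holds one revenue figure per signup (hypothetical input).
    """
    if not revenues:
        raise ValueError("revenues must not be empty")

    # Rank accounts from highest to lowest revenue once, then reuse the ranking.
    ranked = sorted(revenues, reverse=True)
    total = sum(ranked)

    shares = {}
    for fraction in fractions:
        # Number of accounts in the top slice; always keep at least one.
        k = max(1, round(len(ranked) * fraction))
        shares[fraction] = sum(ranked[:k]) / total if total else 0.0
    return shares


# Twenty invented accounts: four large ones and sixteen small ones.
revenues = [400] * 4 + [25] * 16
shares = revenue_concentration(revenues)

for fraction, share in shares.items():
    print(f"Top {fraction:.0%} of signups -> {share:.0%} of revenue")
```

With these made-up numbers the top 10% covers 40% of revenue, but the top 20% covers 80%. An analyst who knows the hypothesis will try the second cutoff; one who was only asked for a dashboard has no reason to.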

“Can you build me a dashboard with our top-earning customers?” is a poor question. It hints at the same underlying model, but it disempowers the data analyst. It tells them nothing about what your assumptions are, and it treats them as an analytics service desk.

That’s it for this week. I will explore this topic more in the future.

Until next time.

“the issue is ‘induced demand’ where the more easy questions you can answer, the more exotic questions you’ll get”

This observation really hit home for me. Exotic questions will then require an outsized portion of time, and typically yield a low volume of actionable insights / results.

How do you recommend teams push back against these requests?