The Evolution of Data Practice: Data Strategy Playbook - Part 2

Strategic gameplay in the data landscape

Hello and welcome to the latest issue of Data Patterns. If this is your first one, you’ll find all previous issues in the archive.

In the last edition, we started making sense of the data landscape using Wardley Maps, with a quick introduction to the tool.

In this edition, we’ll discuss the evolution of data practice, how it led me to the insight that kicked off the Metrics Playbook (now SOMA), and how we’re using a powerful gameplay (open source) to accelerate this transition.

How Data Practice Evolves

Data practice is the collection of work patterns that data teams consistently use in their day-to-day work: how you do data engineering, how you do data analysis, how you write your queries, how you build your dashboards, how you present findings, etc.

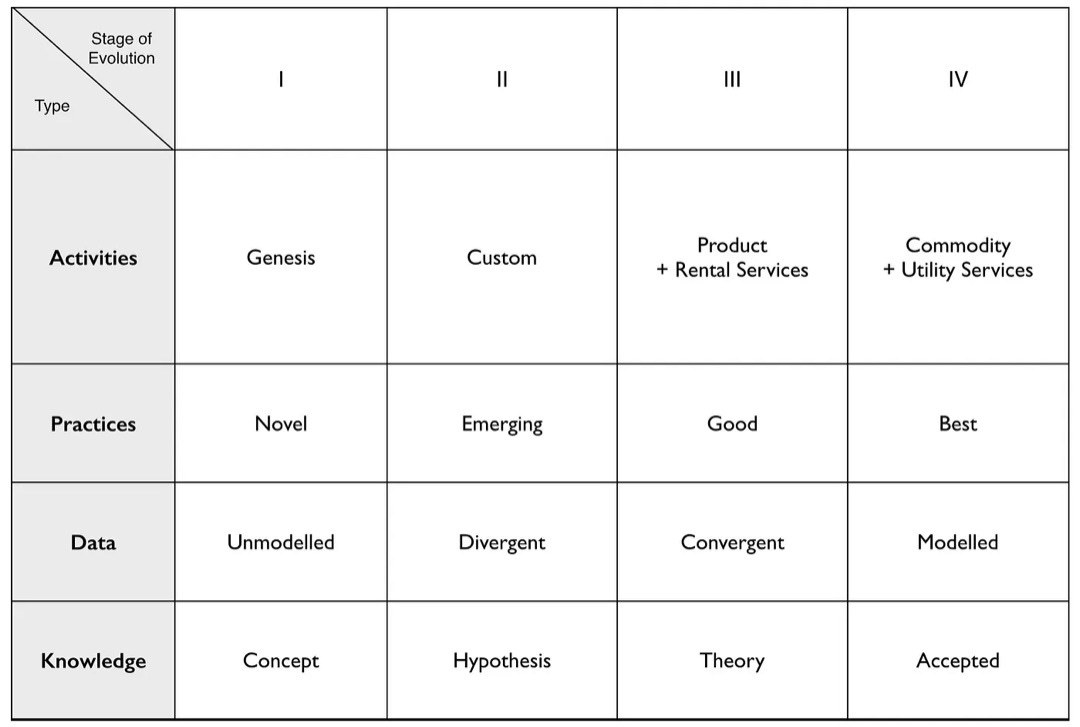

If you recall from the previous issue on Wardley Maps, I mentioned that the elements in the map naturally evolve from left to right. Activities move from Genesis, when they’re first discovered, towards becoming a commodity. Similarly, practices evolve from Novel Practice to Emerging Practice to Good Practice to Best Practice.

Let’s illustrate how it works:

Back in 2012, DJ Patil and Thomas Davenport wrote in the Harvard Business Review that data scientist was the “sexiest” job of the 21st century. Data science has its roots in a set of ideas and algorithms called data mining, which was a very novel practice in the 90s and early 00s.

Note: Data mining has its roots in statistics and computer science, but we don’t need to go that far back to illustrate the evolution of a practice.

At that time, data science was still an emerging practice. There were no books written about it, no blog posts, no courses and no college degrees. The tools were scarce and very expensive. (Anyone remember SAS and SPSS?)

In fact, when I decided to get into data science back in 2015, the only resources I could find were academic books about data mining and statistics. I found just one book that discussed practical applications, and it was about credit scoring in banks.

Compare that with today’s landscape. There are many freely available tools (Python, R, Julia, Jupyter, etc.), hundreds of books and courses, thousands of blog posts and YouTube videos, and many college degrees.

We can confidently say that data science has moved past the Novel and Emerging stages and is now a Good Practice making inroads into the Best Practice stage. By the way, a good indicator of evolution in a field is the creation of college degrees. When colleges start offering degrees in a field, it’s already past the Emerging stage.

When I read this in Wardley’s book, I immediately thought, “Hmm, if everything evolves, then data practice must also evolve and become standardized and commoditized.” I posted some tweets about it and got very good engagement on social media. In fact, that’s how I connected with Abhi Sivasailam.

He had the same idea (commoditizing analytics) but had gone much further than me and already had ideas on how to do it. As we discussed this with other people, we discovered that we weren’t the only ones who had arrived at this insight. All that remained was coming up with a strategy to propel it forward.

That’s where climatic patterns come in.

Climatic Patterns

In his book, Wardley identifies a number of what he calls Climatic Patterns. These are things that change the map regardless of your actions, such as competitors making moves or consumers’ tastes changing. I won’t list every single one here (there are quite a few of them), but I’ll cover the ones that apply to our situation.

1. Everything evolves

All the components of the map evolve from left to right as a result of supply and demand competition. It’s important to note that without supply and demand competition, there would be no evolution. To see this, simply look at abandoned programming languages: once nobody demands them, they stop evolving.

Wardley put it best:

Everything evolves from that more uncharted and unexplored space of being rare, constantly changing and poorly understood to eventually industrialised forms that are commonplace, standardised and a cost of doing business

2. No choice in evolution

While I understood the pattern of evolution quite well (I could see many examples of it happening), this one was puzzling. How come I have no choice in this evolution?

The root of this pattern is an idea borrowed from biology known as the “Red Queen effect”, which proposes that species must constantly adapt, evolve and proliferate in order to survive in an ever-changing environment alongside other evolving species.

Again, a quote from Wardley’s book:

As components within your value chain evolve, unless you can form some sort of cartel and prevent any new entrants then some competitors will adapt to use it whether utility computing, standard mechanical components, bricks or electricity

3. Efficiency enables innovation

As a practice becomes standardized, it enables novel practices that would not have been possible, or even visible, before standardization. For example, the standardization of data science Python libraries (like NumPy, SciPy and Pandas) paved the way for more advanced deep learning libraries such as Keras, TensorFlow and PyTorch.

As Wardley puts it:

In the Theory of Hierarchy, Herbert Simon showed how the creation of a system is dependent upon the organisation of its subsystems. As an activity becomes industrialised and provided as ever more standardised and commodity components, it not only allows for increasing speed of implementation but also rapid change, diversity and agility of systems that are built upon it

Now we know that data practice will eventually evolve, but how long will that take? Wardley is resolute that the timing of evolution is inherently unpredictable: we know what will happen, but not when.

4. Past success breeds inertia

Inertia is the calcification of ways of doing business that have worked in the past. It makes it very hard for companies to keep up with the latest best practices.

Here’s Wardley again:

Despite any pressure to adapt, you and your industry are likely to resist its industrialisation and your enjoyment of such wealth creation. You want to stay exactly where you are. This resistance to movement is known as inertia.

It’s a lot easier for a startup to adopt a standard like SOMA than for a 1,000+ person company. This remains by far the biggest challenge we face in driving adoption of SOMA. But if we gain enough momentum, not adopting it will become the hindrance.

It is almost always new entrants, unencumbered by past success, who initiate the change.

So what can we do? Is there a way to accelerate this evolution? Can we bypass this inertia? Our vision is that once this standard is adopted widely in the industry, it will enable innovation in other, as-yet unseen areas of analytics.

That’s where context-specific gameplay comes in.

Strategic Gameplay

Two of the most interesting gameplays are accelerators and de-accelerators (that’s not a typo, by the way). Accelerators deliberately push a component forward through the stages of evolution, while de-accelerators try to hold it back for as long as possible.

Accelerators

If you recall, we talked earlier about the evolution of data science. Key enablers of this were the free and open source R and Python programming languages and the many free and open source packages available for them. Before these, you had to shell out hundreds of thousands of dollars for proprietary software.

It seemed that the very act of open sourcing, if a strong enough community could be created would drive a once magical wonder to becoming a commodity. Open source seemed to accelerate competition for whatever activity it was applied to.

Here are a few context-specific gameplays that can accelerate adoption:

Open sourcing data and/or code

Exploiting network effects

Cooperation

etc.

We’re trying to use all of them for SOMA. We’re open-sourcing our metrics and analytics stack, cooperating with other players in this space, and exploiting network effects where possible.

De-accelerators

Just as there are accelerator gameplays, there are also de-accelerator gameplays, often used by incumbents to protect their market share. Some common ones are:

Creating constraints

Fear, uncertainty and doubt (FUD)

Intellectual Property Rights (IPR)

Government policies

etc.

For example, certain tool vendors might lobby for a law or policy that prohibits the use of free, open source statistical analysis software in certain industries. In the early 2000s, when open source Linux was becoming more widely available, many software vendors spread FUD about open source software, claiming it was easier to hack because its source code was open.

As unpleasant as that seems, it’s not uncommon.

That’s it for now. I’ll write more about this topic because it’s of great interest to me, and I hope to you as well.

Until next time.