How to make SQL queries faster

Query performance patterns that save you money + I'm back!

I’m back after a brief hiatus last month, ready to fill your inbox with new data patterns as I discover them.

Today we’re going to be talking about my favorite subject, improving query performance. I have to admit I’m quite addicted to solving these problems.

So let’s get to it.

The importance of query performance

With the advent of “unlimited” compute and storage on cloud data platforms (CDPs) like Databricks, BigQuery, Snowflake, Redshift, etc. it seems like query performance is no longer a concern. In fact these mammoth databases are capable of scanning hundreds of Gigabytes of data in mere seconds.

Great you say, query away….but wait there’s catch!

While your queries are fast now, there’s no telling they’ll be fast as the volume of data increases or as data workflows become more complex.

You can’t solve this problem through clever design given that most of this behavior is emergent and unpredictable. You can’t predict how a table or dbt model you build today will be used tomorrow.

With increased data volumes and complexity comes another insidious problem: namely cost. Cloud data platforms will more than happily run your 200GB query and return results in a couple of minutes but every time this query runs you pay $$$.

Making queries faster is no longer just about performance. It is imperative to saving you money in the long run.

If you’ve read my book (Minimum Viable SQL Patterns) you’ve already seen some of my Query Performance patterns.

Let’s recap before talking about some new stuff.

Reducing Rows

This one is pretty straightforward. The fewer rows you scan, the faster your query runs. The most common way to do this is through the WHERE clause. If you only need a month’s worth of data, then make sure you bake in a “sliding bounded window” as I called it in the book:

SELECT col1, col2, col3

FROM table

WHERE last_updated between today - 30 days and today

I’ve recently come to learn another pattern for reducing rows that I didn’t originally think about. You can also reduce the number of rows by aggregating data to a coarser grain, especially once you realize that the raw data is repetitive in predictable ways.

SELECT col1, col2, col3, max(last_updated)

FROM table

GROUP BY 1,2,3

I was able to reduce the data being processed in a multi-million row table by a factor of at least 5 thus reducing a query from 2.5 hours to 15 minutes by pre-aggregating data. I’m using PostgreSQL so I’m not being charged for processing time, but in any other environment this could mean significant savings.

Reducing Columns

If you ever use SELECT * FROM table you should think twice about deploying it to production. I actually treat it as a “code smell” which means when I see it I immediately pay attention to it to see if the usage makes sense. Often the answer is no.

Getting all the columns from a table is almost always unnecessary. This is even more important in columnar databases like the CDPs mentioned above. They store data by columns so the more columns you choose the more data you scan.

There are a few scenarios where SELECT * FROM table might make sense.

In views. It’s generally accepted to have SELECT * FROM table in a view since the schema might evolve and you want the view to always be up to date.

When using NOT EXISTS. If you want to filter table A by referencing table B and you use NOT EXISTS it’s usually followed by SELECT * FROM table.

Delaying Ordering

Another “code smell” is using ORDER BY prematurely in a CTE or subquery (please don’t use subqueries unless you have to! Sorting data is a pretty expensive operation especially if the data is very large so avoid it as much as possible.

My rule of thumb is to never use ORDER BY in a query unless I’m forced to. When would you be forced to use it? Well if you use window functions you’re often required to use ORDER BY. That WILL slow your query down.

Watch out for Hidden Distinct

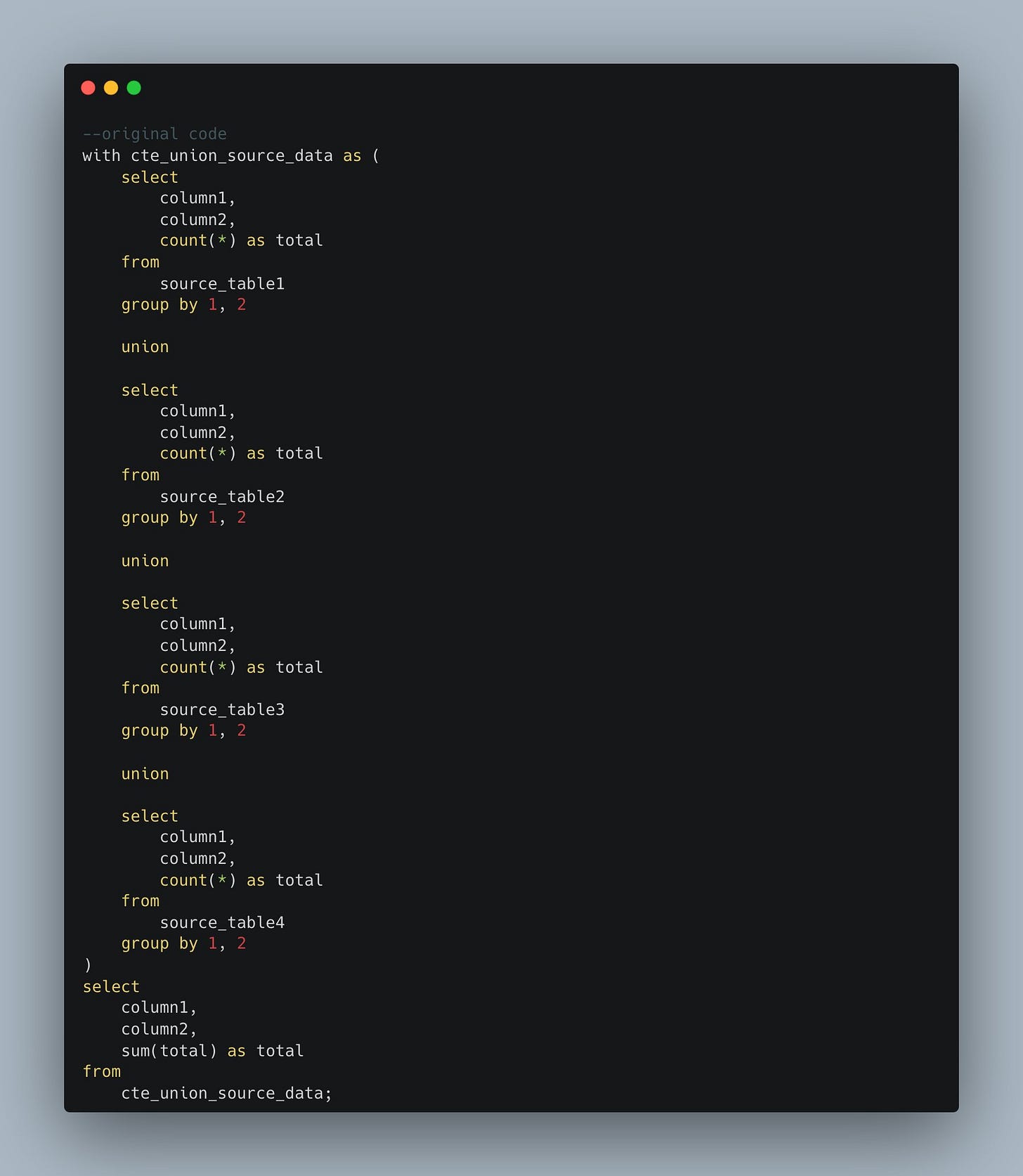

Recently I significantly improved the runtime of a dbt model (SQL query) from 15 minutes to 1 minute with a very simple change. Here's the code.

Can you spot my mistake?

Ok, ok I'll tell you. In the slow query, I'm doing aggregation for every table and then I'm doing a UNION which enforces a DISTINCT operation for the rows. I was aggregating data again in the second step.

These operations by themselves are not that expensive, but when you union together 10 tables with millions of rows each, it can get very computationally complex. So how did I fix it?

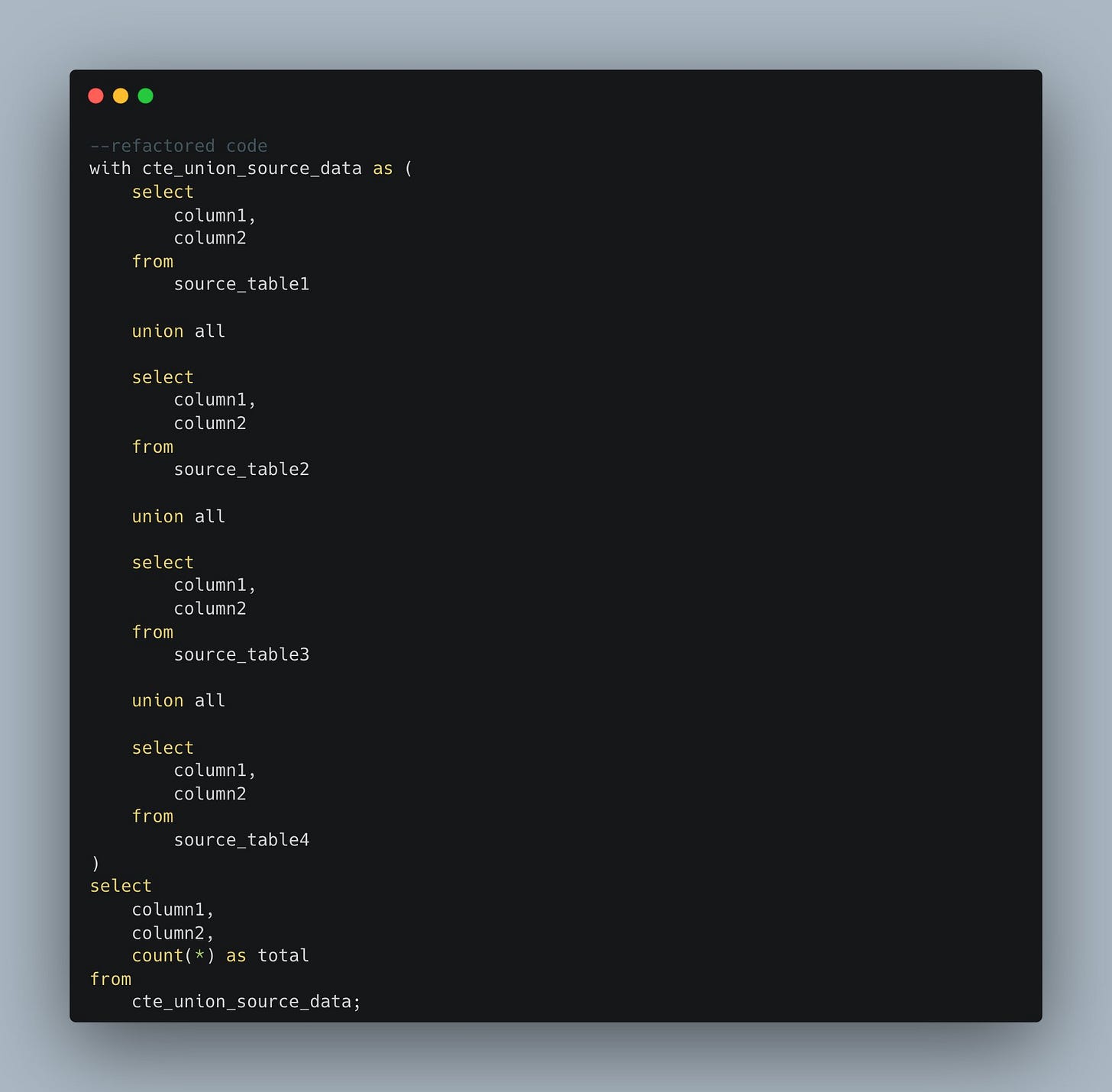

I removed the aggregation on each of the tables, so now it's a simple SELECT. I also changed the UNION to UNION ALL which no longer applies the DISTINCT operation.

Then I changed the aggregation from a SUM to a COUNT. The final result is identical to before but now it runs 15 times faster! Sometimes it's the smallest changes that make the largest difference.

Here’s the final result.

In some databases it might be ok to do the aggregation first because the database can parallelize the operation and it’s on a smaller table so try it both ways and see.

That’s it for today.

Until next time!